Written by Tony King-Smith / Posted at 2/11/20

Production L2-L3 ADAS begins with hardware

Flexibility is costly

The software solutions developed for top-tier ADAS are extremely complex. To achieve highly automated functions, developers rely on a wide range of methods, from traditional algorithms through increasingly sophisticated decision trees to artificial intelligence. This means a huge variety of mathematical calculations is carried out again and again in automated driving software, tens of times every second.

While this is a challenge in itself, it's a well-known one. Even slightly specialized tasks, such as displaying a touchscreen user interface, require a wide variety of complex calculations. This is why we rely so heavily on CPUs and GPUs.

While CPUs were always designed to be general-purpose, GPUs – which were originally designed only to compute graphics algorithms – are extremely flexible and programmable for a growing number of different use cases. However, flexibility always comes at a significant cost – both in complexity and size of chips, and the power they consume.

The latest smartphones have achieved a spectacular balance between flexibility and low power. So, what makes driving automation different? Two things: first, the requirements of the automotive use case, and second, artificial intelligence (AI).

CPUs and GPUs are general-purpose and powerful, yet their power consumption, size and heat generation are all issues for automotive deployment.

Automotive isn’t about flexibility

Neither piece of the puzzle can be ignored. Continuous operation over a long lifetime, demanding thermal environments, limited power supply, small size requirements and tough cost targets, together with demanding automotive safety standards, form a package unique to automotive. And above all, lives are at stake.

On the other hand, artificial neural networks (NNs) have large processing demands and have to run with minimal latency in a vehicle, to make decisions fast enough to handle all possible situations in a safe and timely manner.

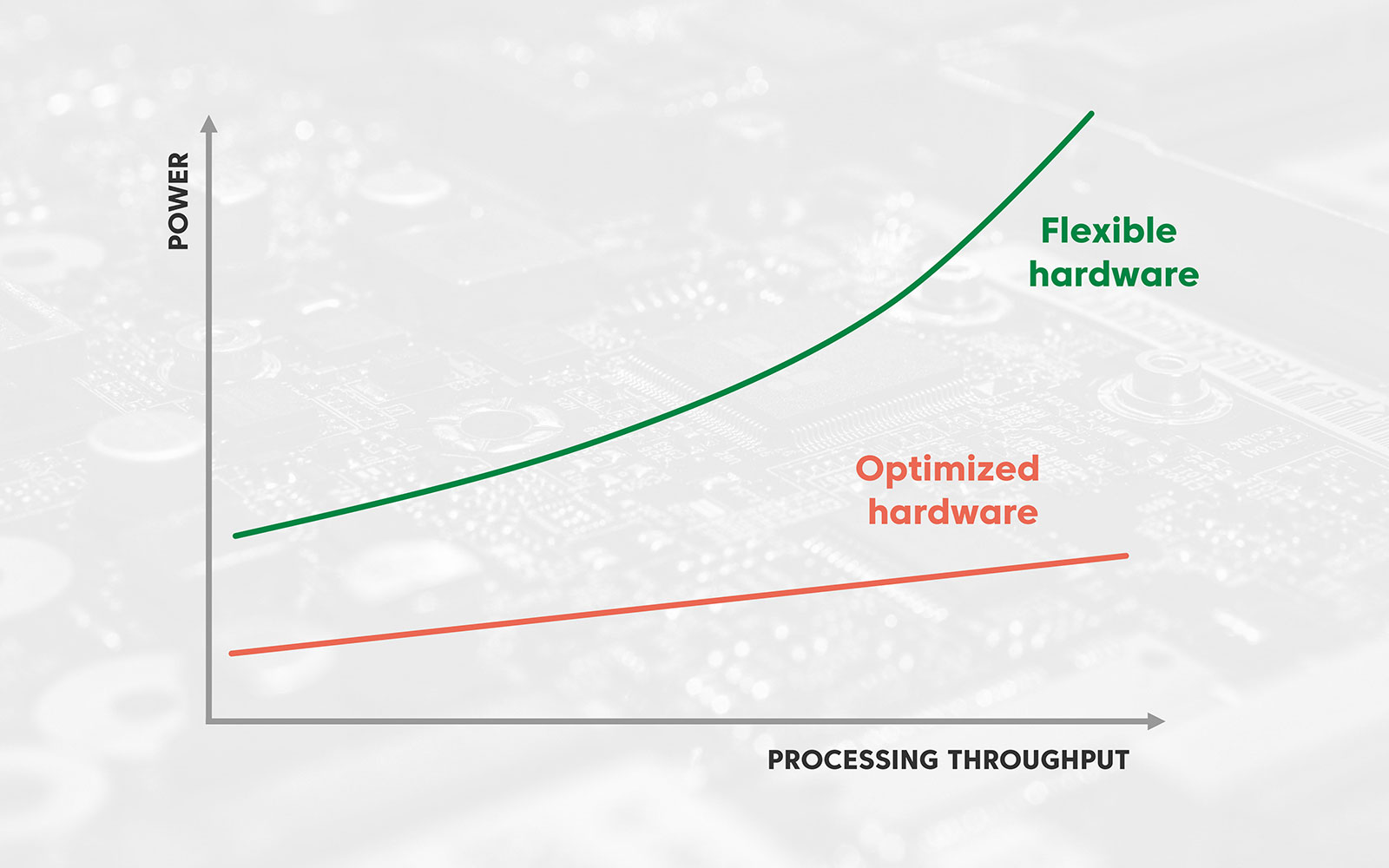

It seems the laws of physics are stacked against a realistic production solution. The more advanced the AI, the more computation it needs – and calculating NNs is well known for being extremely demanding on processing power. Generally, more processing power means a larger electrical power draw, which must be supplied by the vehicle. More power means more heat, which needs to be dissipated in operating conditions that may exceed 100 °C. Increasing processing power therefore usually results in spiraling power and thermal demands, as well as cost, all of which threaten high-volume mainstream deployment.

Fortunately, the opposite is also true: the less power needed, the less challenging the power and thermal systems are. The most effective way of reducing power? Optimized hardware platforms.

Optimization ensures that hardware gives more processing throughput while drawing less power, a must-have for automated driving.
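The relationship between throughput, efficiency and power can be sketched with simple arithmetic. The snippet below is only an illustration: the function name, the workload size and both efficiency figures are hypothetical values chosen for round numbers, not measurements of any real chip.

```python
def power_draw_watts(required_tops: float, tops_per_watt: float) -> float:
    """Electrical power needed to sustain a workload at a given efficiency.

    required_tops: sustained NN throughput the software needs (tera-ops/s).
    tops_per_watt: efficiency of the hardware platform (tera-ops/s per watt).
    """
    if tops_per_watt <= 0:
        raise ValueError("tops_per_watt must be positive")
    return required_tops / tops_per_watt


# Hypothetical workload and efficiency figures, for illustration only.
workload_tops = 100.0
general_purpose = power_draw_watts(workload_tops, tops_per_watt=0.5)
optimized = power_draw_watts(workload_tops, tops_per_watt=2.0)

print(f"General-purpose platform: {general_purpose:.0f} W")
print(f"Optimized platform:       {optimized:.0f} W")
```

Since almost all of the electrical power a chip consumes is dissipated as heat, any gain in efficiency for the same workload reduces the demands on both the power supply and the cooling system by the same factor.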

Dedicated solutions are leading the charge

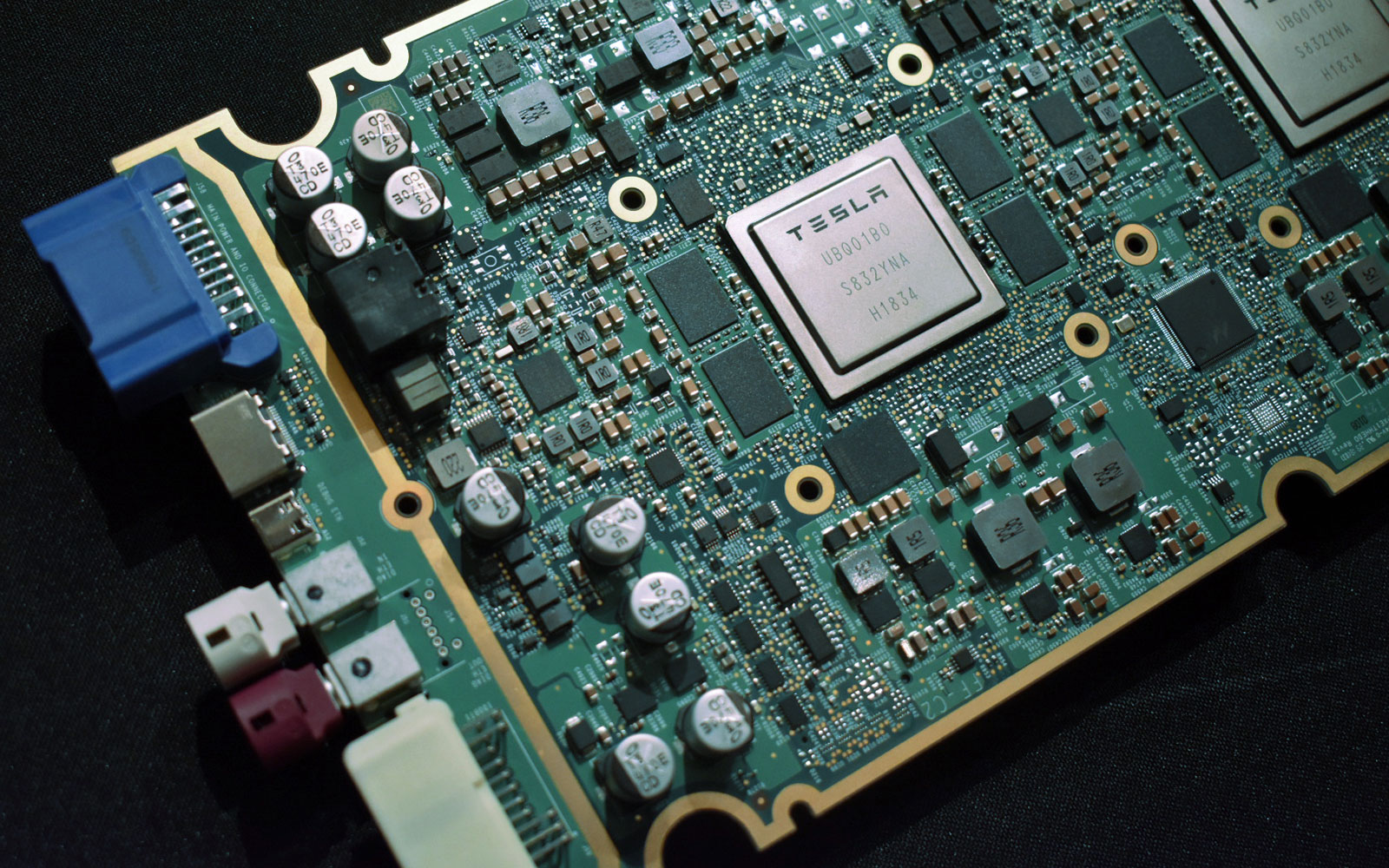

This is the path that Tesla is already walking. The pioneering OEM announced the Full Self-Driving (FSD) platform last year. Developed in-house, the purpose-built SoC (system on chip) is geared specifically towards highly efficient neural network processing at low power.

Why did they take such a radical decision to design their own chip for FSD? Beyond a desire to control the supply chain, they recognized the need for a truly optimized hardware platform for their own AI-intensive workloads. Furthermore, by developing the FSD in-house they have a deep understanding of its capabilities, ensuring the platform will be as efficient as possible (saving more power) and accommodate future updates to their automated driving features.

Tesla released the FSD in 2019 after less than two years of development. By contrast, AImotive has just released the third-generation aiWare3P, an upgraded version of our NN accelerator hardware IP core, which has been in development for over four years and was first released back in 2016. One could almost say that Tesla is following in our footsteps...

The Tesla FSD contained the first purpose-built automotive AI accelerator from an OEM.

Our aiWare NN acceleration technology is developed using direct feedback from our teams working on automated driving software and the first-hand experience we have collected while testing our solutions on real roads. As a result, aiWare has grown into the most compelling offering for automotive NN inference on the market. The mature IP provides outstanding power and execution efficiency, while ensuring highly deterministic, ultra-low latency operation.

In our latest release, in addition to upgrades to our hardware's performance, flexibility and efficiency, we’ve included a host of new tools in our updated SDK (Software Development Kit). These new tools are designed to help with the tough challenges developers face when porting NNs trained in laboratories to hardware platforms that use our aiWare NN acceleration technologies – challenges we understand because we develop complete vehicle platforms, not just benchmark demonstrations. All that translates into higher performance at lower power consumption – which is exactly what we need to drive mainstream adoption of L2 and L3 autonomous vehicles.

The aiWare Visual Profiler is a mature optimization tool within the aiWare SDK.

Upgradeability hampered by re-certification

Furthermore, while Tesla opted for a large and complex SoC, which is expensive and difficult to upgrade, we believe that, for high-performance hardware platforms, aiWare is best deployed as an accelerator chip alongside existing or future SoCs. This means aiWare-powered NN accelerator subsystems can be easily upgraded as algorithms continue to improve, without having to replace the entire SoC.

Automotive safety standards require the validation of complete solutions. If changes are made to the hardware platform, the complete system must be re-validated and re-certified. However, changing only one element of the hardware platform, or adding one or two more NN accelerator modules to it, minimizes the challenges of re-certification, making mid-life upgrades to the platform’s processing power much more viable. Furthermore, adopting an accelerator-based approach simplifies the software interface, making the software more open to changes such as replacing the NN accelerator itself.

Understanding automotive AI

Relying on our comprehensive understanding of automated driving, AImotive has been working on solving the challenges of deploying automotive NNs on automotive-grade hardware platforms for over four years. aiWare is soon to power L2 ADAS functions in production vehicles and is ready to scale to L3 and above. We have already invested hundreds of person-years in designing the latest leading-edge AI algorithms, NN architectures and hardware platforms. With aiWare3P we are now delivering one of the most compelling hardware acceleration solutions for automotive NN inference on the market.