Written by Luca Csanády / Posted at 1/14/22

aiDrive 3.0 – the solution for automated driving

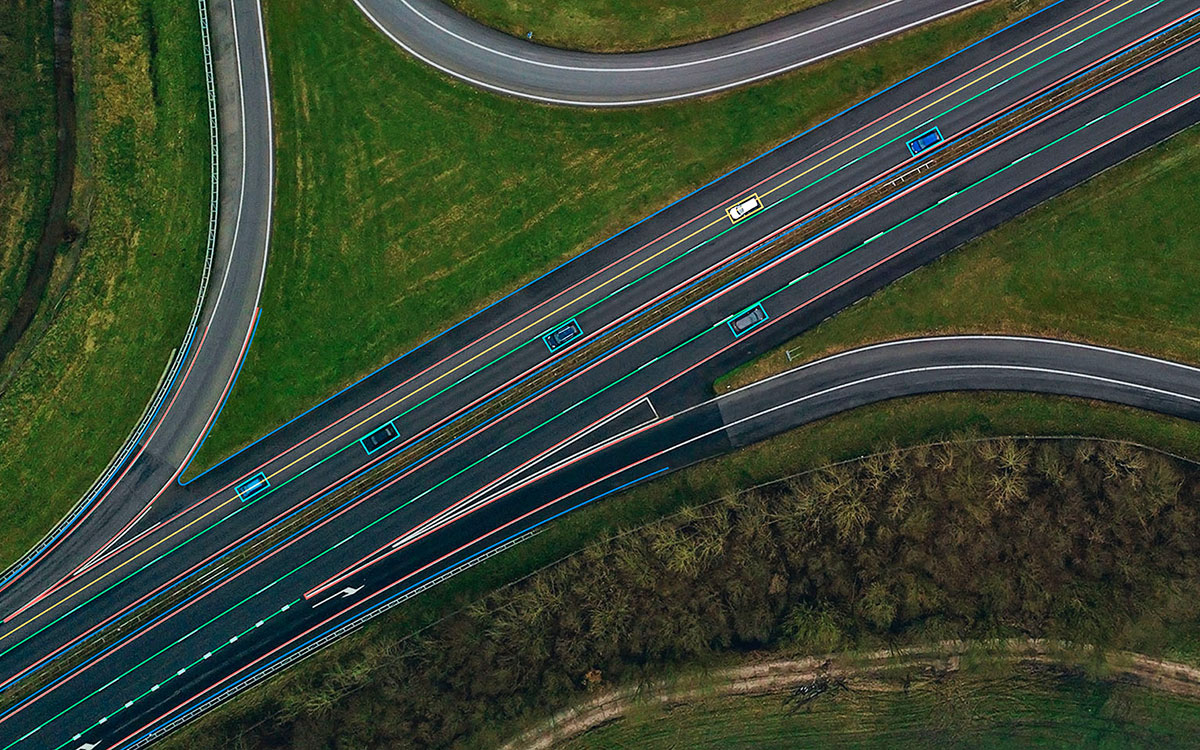

The latest generation of our automated driving full software stack, aiDrive 3.0, has just been released, featuring our in-house model-space based perception, which is the most solid foundation for automated driving solutions.

Automated driving – a bigger challenge than expected

We have seen enormous advances in the complexity of driving automation since the development of assisted driving systems with L2 ADAS functions like adaptive cruise control or lane-keeping – and no step along the way has been easy. Those who have been following the industry from its early days know that only a few years ago many expected to see fully autonomous driving by around 2025. Such predictions now seem far-fetched, and even the availability of L3 systems will probably be very limited by then.

The reason is that the requirements for robust perception and (especially) data for development and validation pose a much more significant challenge than initially anticipated.

The answer: model-space based perception

The foundation of any automated driving software is perception: how the environment around the vehicle is perceived and interpreted. The neural networks (NNs) at the heart of the perception pipeline can only be reliable if they are trained and validated on large, diverse, contextually rich annotated data sets.

Existing systems split environment reconstruction into two major phases. First, distinct perception algorithms handle different types of objects or tasks in 2D image space. Their outputs then pass through several layers of abstraction, transformation, and association/fusion. This structure is very limiting: to achieve full automation, an automated driving system needs a profound, consistent understanding of the environment around it. Any such system will need to be AI-based, so our focus should be on both the NN architecture and the collection and labelling of data.

To maintain a quick, complete understanding of the scene around the ego-vehicle, a neural network should be able to produce a simple, unified 3D map of its environment, with road model, object detection, sensor fusion and even trajectory planning in a single network. We strongly believe that the only solution to this problem is a model-space based network.

aiMotive has been developing aiDrive 3.0 around a unified model-space neural network. It processes input from multiple sensor modalities (camera, radar, or LiDAR), handles multiple tasks (e.g., lane and object detection), and is fed by the output of our automated data annotation pipeline. With aiDrive 3.0 we have set off on the path towards completely data-driven neural network training, allowing for rapid, continuous evolution. This is not unprecedented; Tesla's 4D vector space is a similar concept.
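To make the idea of a single shared representation more concrete, here is a minimal sketch of what "one map for all sensors and tasks" could look like as a data structure. It is not aiDrive code and it is not a neural network: the `Detection` and `ModelSpaceGrid` names, the 1-metre cell size, and the flat top-down grid (a simplification of a 3D map) are all assumptions made for illustration. It only shows observations from different sensors and tasks landing in the same ego-vehicle coordinate frame rather than in separate per-sensor image spaces.

```python
import math
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Detection:
    """One observation already expressed in ego-vehicle coordinates (hypothetical type)."""
    sensor: str  # "camera", "radar", or "lidar"
    kind: str    # task label, e.g. "vehicle" or "lane"
    x: float     # metres ahead of the ego-vehicle
    y: float     # metres to the left of the ego-vehicle


@dataclass
class ModelSpaceGrid:
    """Top-down grid around the ego-vehicle, shared by all sensors and tasks (hypothetical type)."""
    cell_size: float = 1.0  # metres per cell (assumed value)
    cells: dict = field(default_factory=dict)

    def cell_of(self, x: float, y: float) -> tuple:
        # Map a position in metres to an integer cell index.
        return (math.floor(x / self.cell_size), math.floor(y / self.cell_size))

    def add(self, det: Detection) -> None:
        # Record which sensors support each kind of object in this cell.
        by_kind = self.cells.setdefault(self.cell_of(det.x, det.y), {})
        by_kind.setdefault(det.kind, set()).add(det.sensor)

    def query(self, x: float, y: float) -> dict:
        # Return {kind: {sensors}} for the cell containing (x, y), or {} if empty.
        return self.cells.get(self.cell_of(x, y), {})


detections = [
    Detection("camera", "vehicle", 20.3, 1.2),
    Detection("radar", "vehicle", 20.8, 1.6),
    Detection("camera", "lane", 5.0, -1.8),
]

grid = ModelSpaceGrid()
for det in detections:
    grid.add(det)

print(grid.query(20.5, 1.5))  # vehicle cell, supported by camera and radar
print(grid.query(5.2, -1.5))  # lane cell, supported by camera only
```

In this toy version the camera and radar observations of the same vehicle fall into the same cell, so a downstream consumer reads one consistent answer instead of reconciling separate per-sensor outputs.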

Consequently, having a sufficient amount of the right data is critical for such systems. That is why we have already invested significant effort over the past few years into the development of our own data tools: an automatic annotation pipeline (aiNotate), supported by synthetic data generation (aiData) based on our physics-based simulator, aiSim. Such in-house solutions are completely unmatched in the industry and give us the edge over any other automated driving software supplier. These tools ensure endless data supply, a vast diversity of data, quick development cycles, and the rapid, continuous adaptability of the software to meet changing market needs. These benefits, in turn, allow aiMotive to shorten customers' time to market.

aiDrive 3.0 – a superior solution for automated driving

aiDrive 3.0’s model-space based perception is the foundation of higher-level automation but achieves much more than that. It has several game-changing advancements in comparison with traditional perception pipelines.

First of all, it's reusable across different projects at any automation level. This dramatically reduces the need for repeated data collection and annotation, decreasing development costs and time to market.

Thanks to our virtual sensor technology, it is also very lightweight: the whole stack only requires low-power chips (less than 2 × 30 W) for full L3 functionality.

Furthermore, it delivers superior detection performance, enabling automated driving technology to realize its ultimate goal of making road transportation safer for everyone. This includes, for example, superb results in low-light and adverse weather conditions, radically improved distance estimation, recall, and position metrics, and outstanding far-range detection.
